Agent-Based Models

Nick Malleson

Alison Heppenstall

School of Geography, University of Leeds, UK

These slides: http://surf.leeds.ac.uk/presentations.html

Outline

What are ABMs and why are they so popular?

What are they being used for?

Pros and cons

Modelling crime

Predictive and dynamic modelling

Modelling behaviour

Challenges

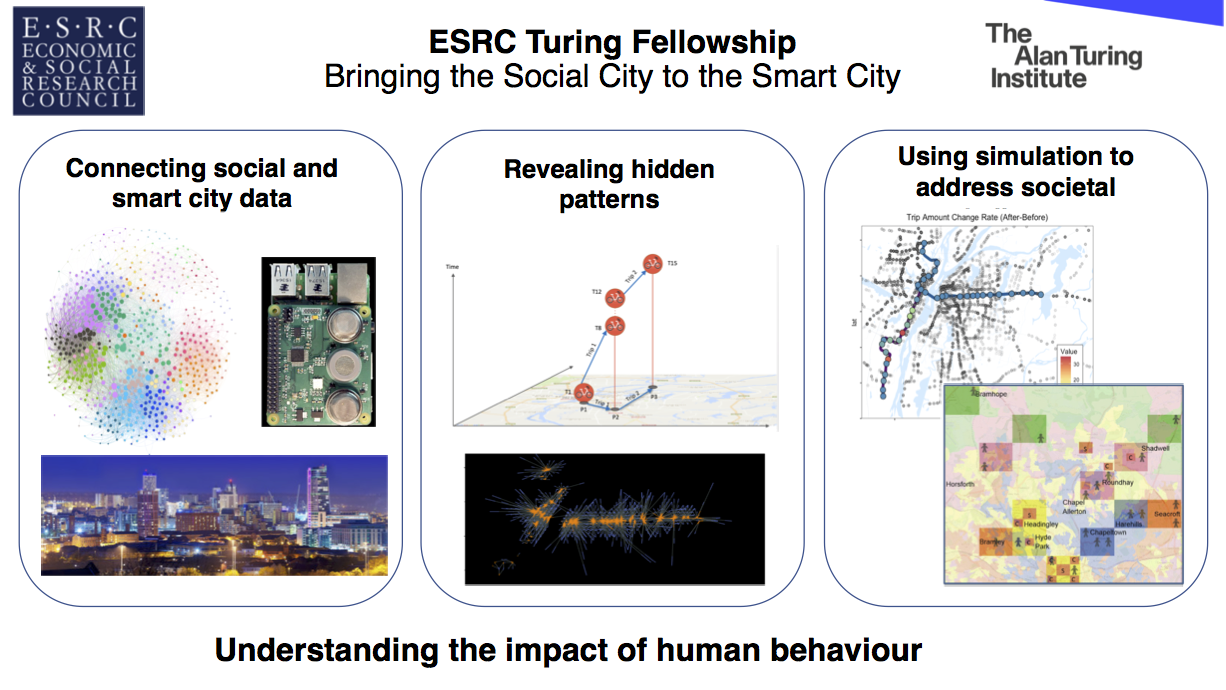

Work we are involved in

Introduction to ABM

Aggregate vs. Individual

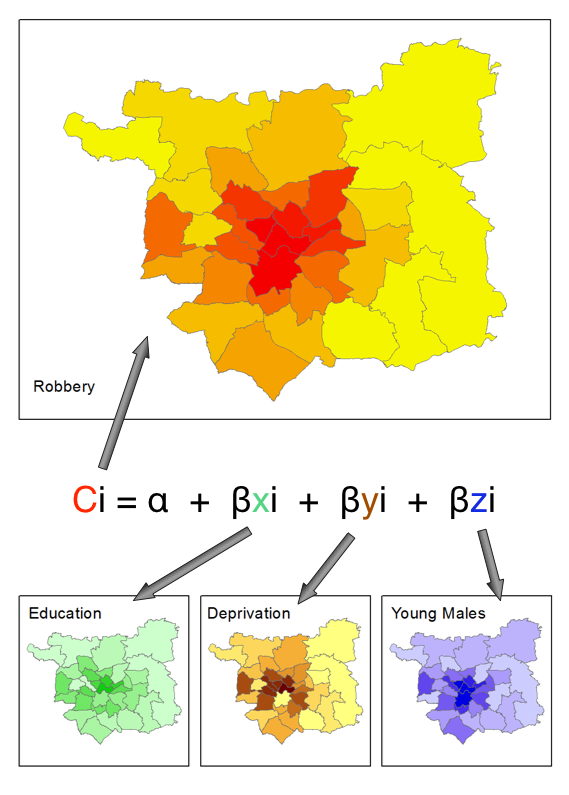

'Traditional' modelling methods work at an aggregate level, from the top down

E.g. regression, spatial interaction modelling, location-allocation, etc.

Aggregate models work very well in some situations

Homogeneous individuals

Interactions not important

Very large systems (e.g. the pressure-volume relationship of a gas)

Introduction to ABM

Aggregate vs. Individual

But they miss some important things:

Low-level dynamics, which get “smoothed out” (Batty, 2005)

Interactions and emergence

Individual heterogeneity

Unsuitable for modelling complex systems

Introduction to ABM

Systems are driven by individuals

(cars, people, ants, trees, whatever)

Bottom-up modelling

An alternative approach to modelling

Rather than controlling from the top, try to represent the individuals

Account for system behaviour directly

Autonomous, interacting agents

Represent individuals or groups

Situated in a virtual environment

Attribution: JBrew (CC BY-SA 2.0).
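The three properties above (autonomous, interacting, situated) can be made concrete in a few lines. The sketch below is a hypothetical, minimal illustration, not taken from any model discussed here: the square grid, the random-move rule and the "contacts" record are all assumptions chosen only to show each property.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Environment:
    """A hypothetical square grid in which agents are situated."""
    width: int
    height: int

    def neighbours(self, x, y):
        # Moore neighbourhood (8 surrounding cells), clipped at the grid edges
        return [
            (x + dx, y + dy)
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)
            and 0 <= x + dx < self.width
            and 0 <= y + dy < self.height
        ]


@dataclass
class Agent:
    """An autonomous agent: it holds its own state and chooses its own moves."""
    ident: int
    x: int
    y: int
    contacts: set = field(default_factory=set)

    def step(self, env, others, rng):
        # Autonomy: the agent decides where to go next
        self.x, self.y = rng.choice(env.neighbours(self.x, self.y))
        # Interaction: remember every other agent now sharing this cell
        for other in others:
            if other is not self and (other.x, other.y) == (self.x, self.y):
                self.contacts.add(other.ident)


def run_agents(n_agents=20, steps=50, size=10, seed=1):
    rng = random.Random(seed)
    env = Environment(size, size)
    agents = [Agent(i, rng.randrange(size), rng.randrange(size)) for i in range(n_agents)]
    for _ in range(steps):
        for agent in agents:
            agent.step(env, agents, rng)
    return agents


agents = run_agents()
print(sum(len(a.contacts) for a in agents), "contacts recorded")
```

No agent is told where to go as a group; any aggregate pattern (e.g. who ends up meeting whom) comes out of the individual steps.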

Emergence

One of the main attractions for ABM

"The whole is greater than the sum of its parts." (Aristotle?)

Simple rules → complex outcomes

E.g. who plans the air-conditioning in termite mounds?

Possible to demonstrate this with simple computer programs

Conway's 'Game of Life'
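A minimal sketch of the Game of Life shows how little code the "simple rules" need. Cells live on an unbounded grid stored as a set of live coordinates (an implementation choice, not part of Conway's definition); the only rules are birth on exactly 3 live neighbours and survival on 2 or 3. The glider, a pattern that travels across the grid, is not written anywhere in the rules: it emerges.

```python
from collections import Counter


def life_step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (x, y) coordinates of live cells on an unbounded grid.
    """
    # Count the live neighbours of every cell adjacent to at least one live cell
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3; every other cell is dead
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
state = glider
for _ in range(4):
    state = life_step(state)
# After 4 generations the glider has the same shape, shifted by (1, 1)
print(state == {(x + 1, y + 1) for (x, y) in glider})  # True
```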

Emergence

Why is it important?

Key message: Complex structures can emerge from simple rules

Emergence is hard to anticipate, and cannot be deduced solely from analysis of an individual's behaviour

Individual-level modelling is focused on understanding, through simulation, how macro-level patterns emerge from micro-level behaviour.

Agent-Based Modelling - Appeal

Modelling complexity, non-linearity, emergence

Natural description of a system

Bridge between verbal theories and mathematical models

Produces a history of the evolution of the system

Agent-Based Modelling - Difficulties

(actually he played with his trains...)

Tendency towards minimal behavioural complexity

Stochasticity

Computationally expensive (not amenable to optimisation)

Complicated agent decisions, lots of decisions, multiple model runs

Modelling "soft" human factors

Need detailed, high-resolution, individual-level data
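Stochasticity is why "multiple model runs" appears in the list above: identical parameters with different random seeds give different outputs, so a single run says little. The sketch below uses a hypothetical toy model (each agent offends with a fixed probability per step; all parameter values are illustrative assumptions) and summarises an ensemble of runs instead of one.

```python
import random
import statistics


def toy_model(seed, n_agents=50, steps=100, p_offend=0.01):
    """Hypothetical stochastic model: each agent offends with probability
    p_offend at each step. Returns the total number of offences."""
    rng = random.Random(seed)
    return sum(
        1
        for _ in range(steps)
        for _ in range(n_agents)
        if rng.random() < p_offend
    )


def ensemble(n_runs=100):
    # Same parameters, different seeds: the outputs form a distribution
    results = [toy_model(seed) for seed in range(n_runs)]
    return statistics.mean(results), statistics.stdev(results), results


mean, sd, results = ensemble()
print(f"mean={mean:.1f}, sd={sd:.1f}, range={min(results)}-{max(results)}")
```

Fixing the seed makes an individual run reproducible, while the spread across seeds is part of the model's output and worth reporting alongside the mean.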

Individual-level data

Creating an ABM

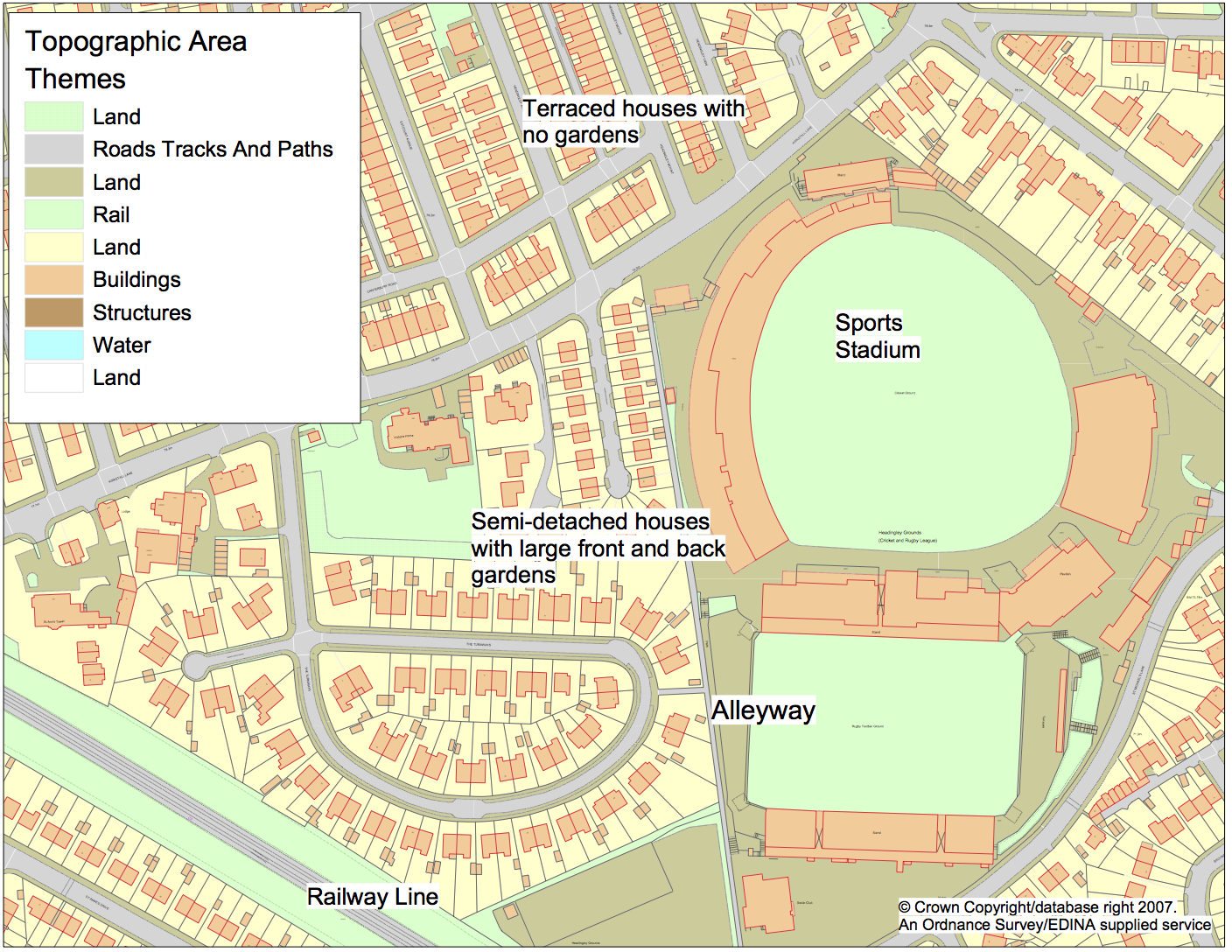

Create an urban (or other) environment in a computer model.

Stock it with buildings, roads, houses, etc.

Create individuals to represent offenders, victims, guardians.

Give them backgrounds and drivers.

See what happens.
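The five steps above can be sketched as a small program. Everything here is a hypothetical illustration rather than the model presented later: the grid "city", the random walk, the single `motivation` attribute standing in for "backgrounds and drivers", and the rule that offenders only burgle unguarded houses are all assumptions. Victims are represented implicitly by the houses.

```python
import random
from dataclasses import dataclass


@dataclass
class House:
    x: int
    y: int
    burgled: int = 0


@dataclass
class Person:
    role: str          # "offender" or "guardian"
    x: int
    y: int
    motivation: float  # hypothetical "driver" in [0, 1)


def build_environment(size, n_houses, rng):
    # Steps 1-2: an abstract grid "city" stocked with houses at distinct cells
    cells = [(x, y) for x in range(size) for y in range(size)]
    return [House(x, y) for (x, y) in rng.sample(cells, n_houses)]


def create_people(size, n_offenders, n_guardians, rng):
    # Steps 3-4: individuals with a role and a simple background attribute
    people = []
    for role, n in (("offender", n_offenders), ("guardian", n_guardians)):
        for _ in range(n):
            people.append(Person(role, rng.randrange(size), rng.randrange(size), rng.random()))
    return people


def simulate(size=20, n_houses=60, n_offenders=5, n_guardians=5, steps=200, seed=42):
    rng = random.Random(seed)
    houses = build_environment(size, n_houses, rng)
    people = create_people(size, n_offenders, n_guardians, rng)
    house_at = {(h.x, h.y): h for h in houses}
    for _ in range(steps):
        for p in people:
            # Everyone takes a random step, clipped to the grid
            p.x = min(size - 1, max(0, p.x + rng.choice((-1, 0, 1))))
            p.y = min(size - 1, max(0, p.y + rng.choice((-1, 0, 1))))
        guarded = {(p.x, p.y) for p in people if p.role == "guardian"}
        for p in people:
            house = house_at.get((p.x, p.y))
            # Hypothetical rule: burgle an unguarded house if motivated enough
            if (p.role == "offender" and house is not None
                    and (p.x, p.y) not in guarded and rng.random() < p.motivation):
                house.burgled += 1
    return houses


# Step 5: see what happens
houses = simulate()
hotspots = sorted(houses, key=lambda h: h.burgled, reverse=True)[:3]
print(sum(h.burgled for h in houses), [(h.x, h.y, h.burgled) for h in hotspots])
```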

Why Model Crime?

Exploring theory ('explanatory' models)

Simulation as a virtual laboratory.

Linking theory with crime patterns to test it.

Making predictions ('predictive' models)

Forecasting the impacts of social / environmental change.

Exploring aspects of current data patterns.

Why is it Difficult?

Extremely complex system:

Attributes of the environment (e.g. individual houses, pubs, etc.).

Personal characteristics of the potential offender and/or victim.

Features of the local community.

Physical layout of the neighbourhood.

Potential offender’s knowledge of the environment.

Traditional approaches often work at large scales and struggle to predict local effects

"Computationally convenient".

But cannot capture non-linear, complex systems.

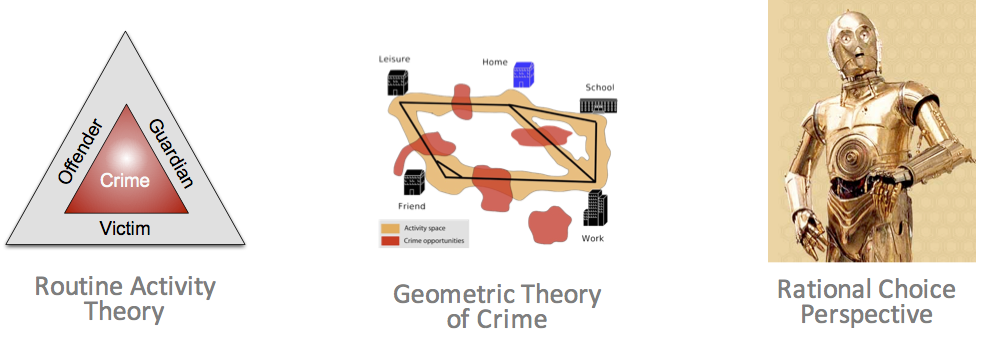

Better Representations of Theory?

Environmental Criminology theories emphasise the importance of:

Individual behaviour (offenders, victims, guardians)

Individual geographical awareness

Environmental backcloth

Better Representations of Space?

Lots of research points to the importance of the micro-level environment

Brantinghams' environmental backcloth

Crime at places research (e.g. Eck and Weisburd, 1995; Weisburd and Amram, 2014; Andresen et al., 2016)

An experiment:

Choose a number between 1 and 4 (inclusive)

Were you able to choose a number at random?

Or did most people choose the number 3?
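The experiment matters for modelling because a rule such as "pick uniformly at random" may not describe how people actually choose. The sketch below compares a uniformly random agent with a hypothetical human-like agent that favours 3, using Pearson's chi-square statistic against a uniform distribution. The weights are illustrative assumptions, not measured data.

```python
import random
from collections import Counter


def chi_square_uniform(counts, k=4):
    """Pearson chi-square statistic of observed counts against a uniform
    distribution over the values 1..k."""
    n = sum(counts.values())
    expected = n / k
    return sum((counts.get(i, 0) - expected) ** 2 / expected for i in range(1, k + 1))


rng = random.Random(0)
n = 1000
uniform_agent = Counter(rng.randint(1, 4) for _ in range(n))
# Hypothetical human-like rule that favours 3 (weights are illustrative only)
biased_agent = Counter(rng.choices([1, 2, 3, 4], weights=[0.1, 0.2, 0.5, 0.2], k=n))

# With 3 degrees of freedom, values above about 7.81 reject uniformity at the 5% level
print(chi_square_uniform(uniform_agent), chi_square_uniform(biased_agent))
```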

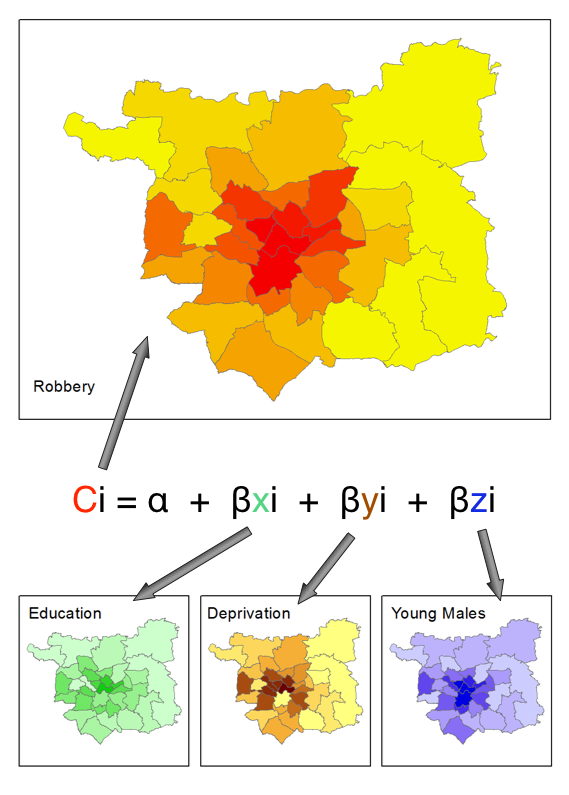

Who else is doing this?

Small, but growing, literature

(apologies to the many who are missing!)

Birks et al. 2012, 2013;

Groff 2007a,b; Hayslett-McCall, 2008

Liu et al. (2005)

Me! (Malleson et al. ...)

A special issue of the Journal of Experimental Criminology entitled "Simulated Experiments in Criminology and Criminal Justice" (Groff and Mazerolle, 2008b)

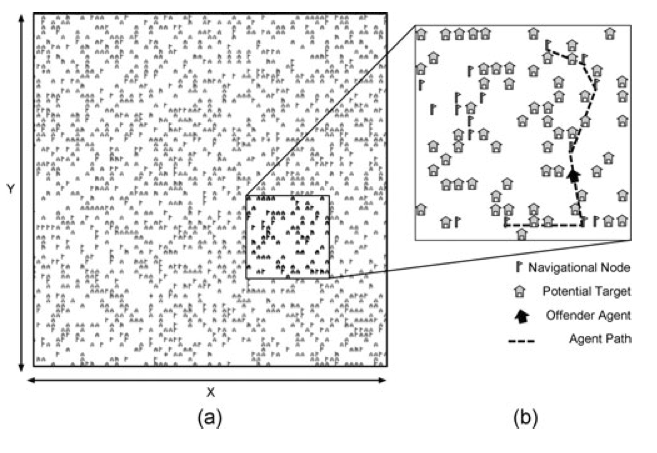

ABM Explanatory Example (Birks 2012)

Explanatory: exploring theory

Randomly generated abstract environments

Navigation nodes (proxy for transport network)

Potential targets (houses)

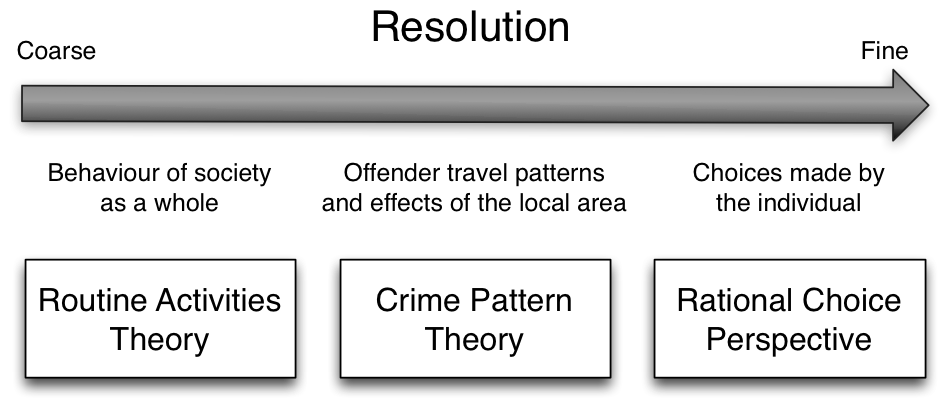

ABM Explanatory Example (Birks 2012)

One type of agent: potential offenders

Behaviour is controlled by theoretical 'switches'

Rational choice perspective (decision to offend)

Routine activity theory (how they move and encounter targets)

Geometric theory of crime (how they learn about their environment)

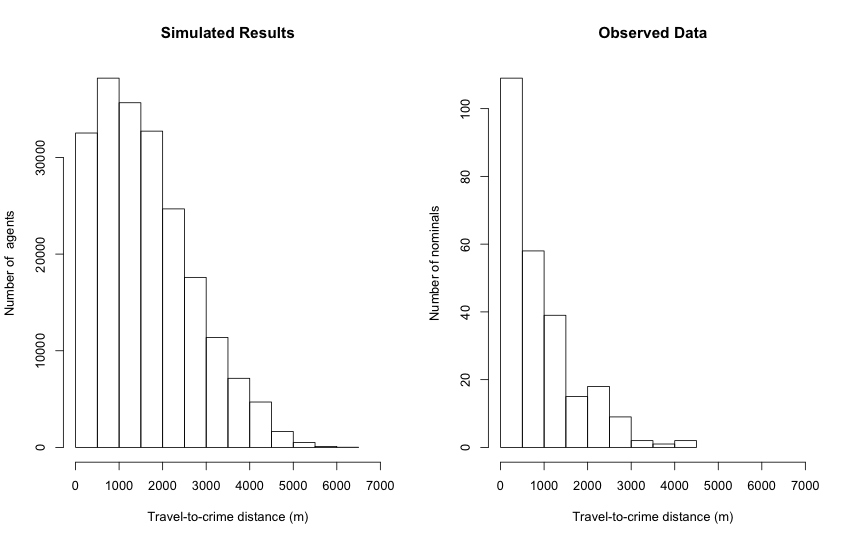
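The idea of theoretical 'switches' can be sketched as boolean flags that turn parts of an offender's behaviour on or off. This is a hypothetical illustration, not Birks' implementation: navigation nodes are bare integers with no geometry, each node holds one target with random reward and risk, and the specific rules behind each switch are assumptions loosely inspired by the three theories listed above.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Switches:
    """Hypothetical on/off switches, loosely modelled on the three theories."""
    rational_choice: bool = True     # weigh reward against risk before offending
    routine_activity: bool = True    # travel only between home and a few activity nodes
    geometric_learning: bool = True  # only offend at places already in the awareness space


@dataclass
class Offender:
    home: int
    activity_nodes: list
    switches: Switches
    location: int = 0
    known_targets: set = field(default_factory=set)

    def next_node(self, n_nodes, rng):
        if self.switches.routine_activity:
            return rng.choice(self.activity_nodes + [self.home])
        return rng.randrange(n_nodes)  # switch off: wander anywhere

    def consider(self, target, reward, risk, rng):
        if self.switches.geometric_learning:
            familiar = target in self.known_targets
            self.known_targets.add(target)  # learn this place for next time
            if not familiar:
                return False
        if self.switches.rational_choice:
            return reward > risk
        return rng.random() < 0.5  # switch off: no decision model, offend at random


def simulate_offender(switches, n_nodes=50, steps=500, seed=0):
    rng = random.Random(seed)
    # Abstract environment: nodes 0..n_nodes-1, one potential target per node
    reward = [rng.random() for _ in range(n_nodes)]
    risk = [rng.random() for _ in range(n_nodes)]
    home = rng.randrange(n_nodes)
    offender = Offender(home, rng.sample(range(n_nodes), 3), switches, location=home)
    crimes = []
    for _ in range(steps):
        offender.location = offender.next_node(n_nodes, rng)
        target = offender.location
        if offender.consider(target, reward[target], risk[target], rng):
            crimes.append(target)
    return crimes, offender


for label, sw in [("all on", Switches()), ("all off", Switches(False, False, False))]:
    crimes, _ = simulate_offender(sw)
    print(label, len(crimes), "crimes at", len(set(crimes)), "distinct targets")
```

Comparing the crime patterns produced under different switch combinations is the kind of experiment an explanatory model supports.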

ABM Explanatory Example (Birks 2012)

Validation against stylized facts:

Spatial crime concentration (Nearest Neighbour Index)

Repeat victimisation (Gini coefficient)

Journey to crime curve (distance decay in offender journeys)
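Two of the measures above can be sketched directly. The Gini coefficient summarises how unevenly victimisation is spread across targets (0 means every target is hit equally often), and the Clark-Evans nearest neighbour index compares the mean nearest-neighbour distance between crime locations with the value expected under complete spatial randomness. These are the standard textbook forms; whether Birks used exactly these variants (e.g. any edge correction) is not stated here, and none is applied below.

```python
import math


def gini(counts):
    """Gini coefficient of victimisation counts per target
    (0 = perfectly even, approaching 1 = concentrated on few targets)."""
    xs = sorted(counts)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum((i + 1) * x for i, x in enumerate(xs))
    return 2 * weighted / (n * total) - (n + 1) / n


def nearest_neighbour_index(points, area):
    """Clark-Evans nearest neighbour index R for crime locations in a study area.

    R < 1 suggests clustering, R close to 1 a random pattern, R > 1 dispersion.
    No edge correction is applied.
    """
    n = len(points)
    observed = sum(
        min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for i, p in enumerate(points)
    ) / n
    expected = 0.5 / math.sqrt(n / area)
    return observed / expected


print(gini([0, 0, 0, 10]))  # 0.75: all victimisation falls on one of four targets
print(nearest_neighbour_index([(0, 0), (0.1, 0), (10, 10), (10.1, 10)], area=100))
```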

Results:

All three theories increased the accuracy of the model

Rational choice had a lower influence than the others

Simple / abstract model:

Not directly applicable to practice

But simplicity allows authors to concentrate on theoretical mechanisms

A realistic backcloth might over-complicate the model (Elffers and van Baal, 2008)

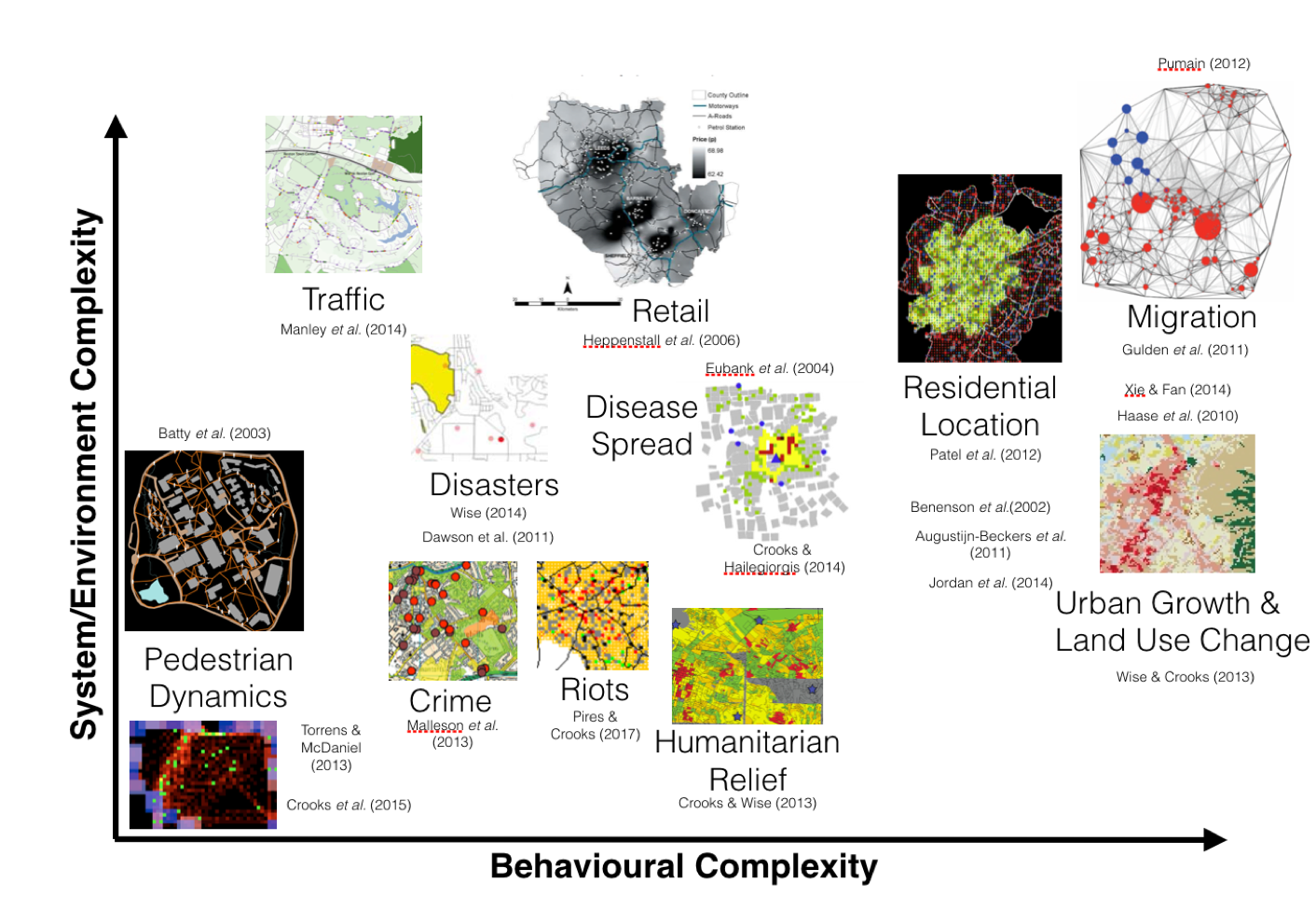

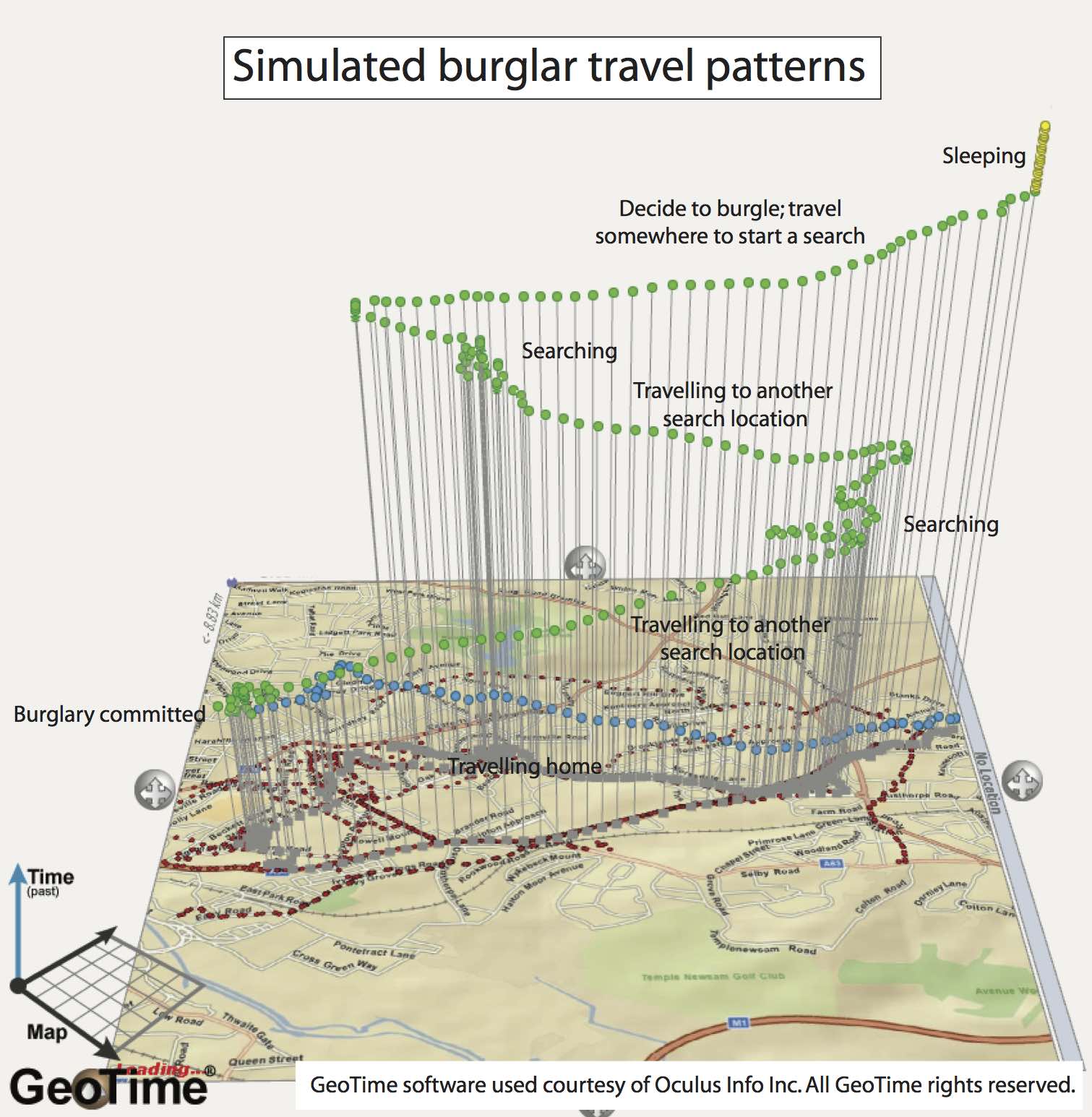

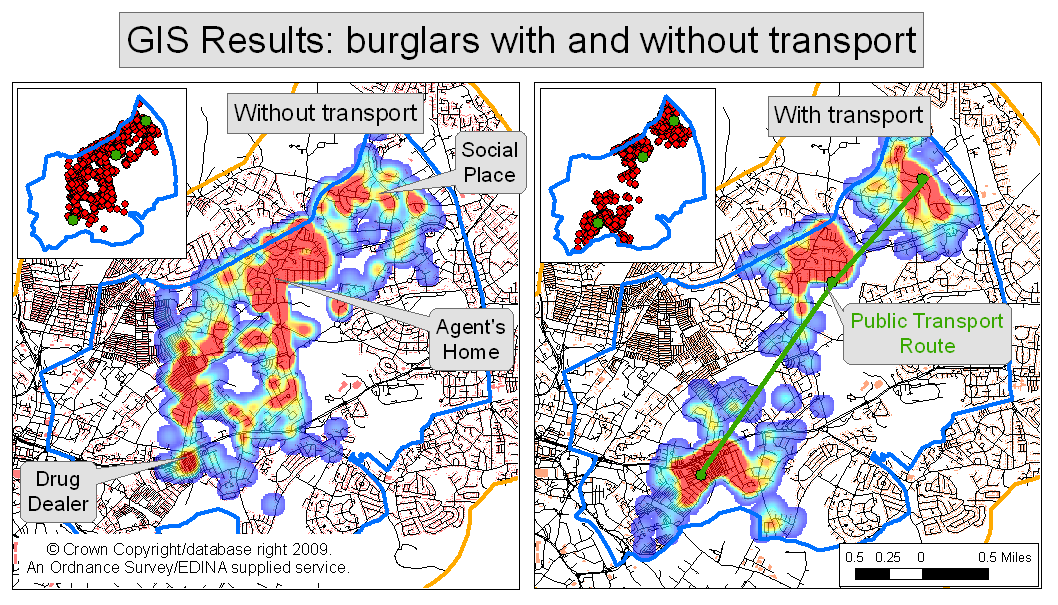

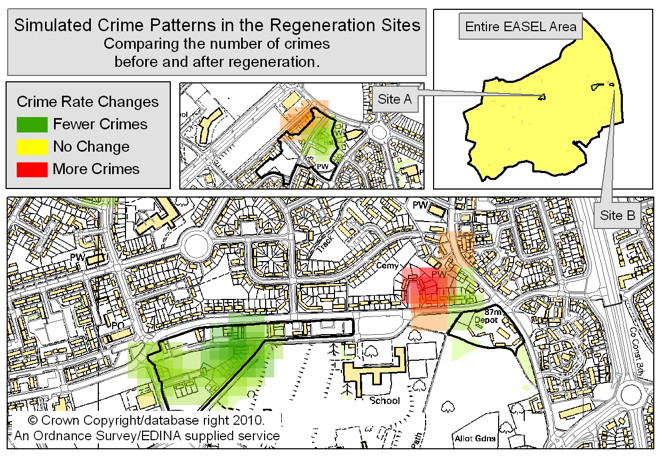

ABM Predictive Example

Predictive: exploring the real world

ABM to explore the impacts of real-world policies

Urban regeneration in Leeds

ABM Predictive Example

Awareness space test

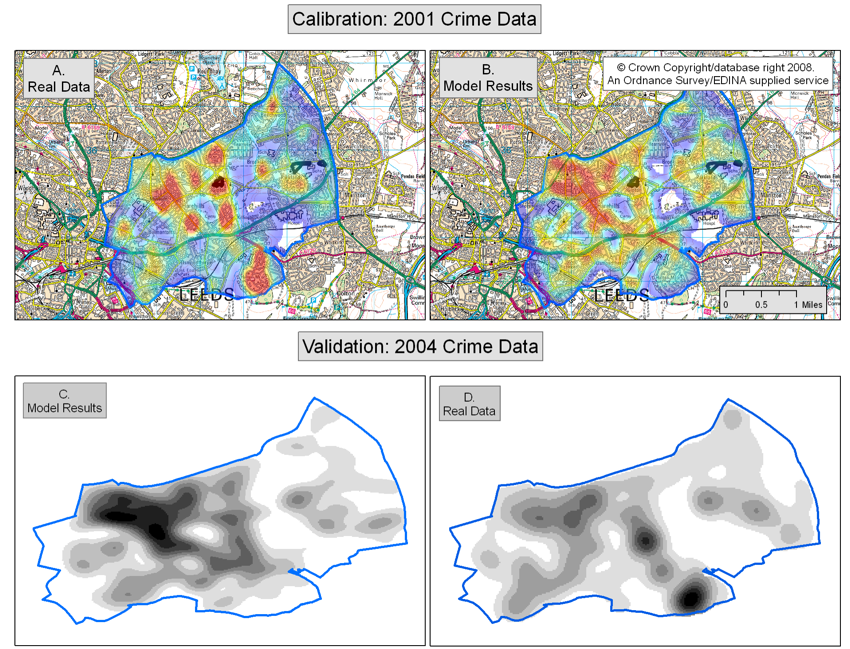

Did it work?

Aggregate results

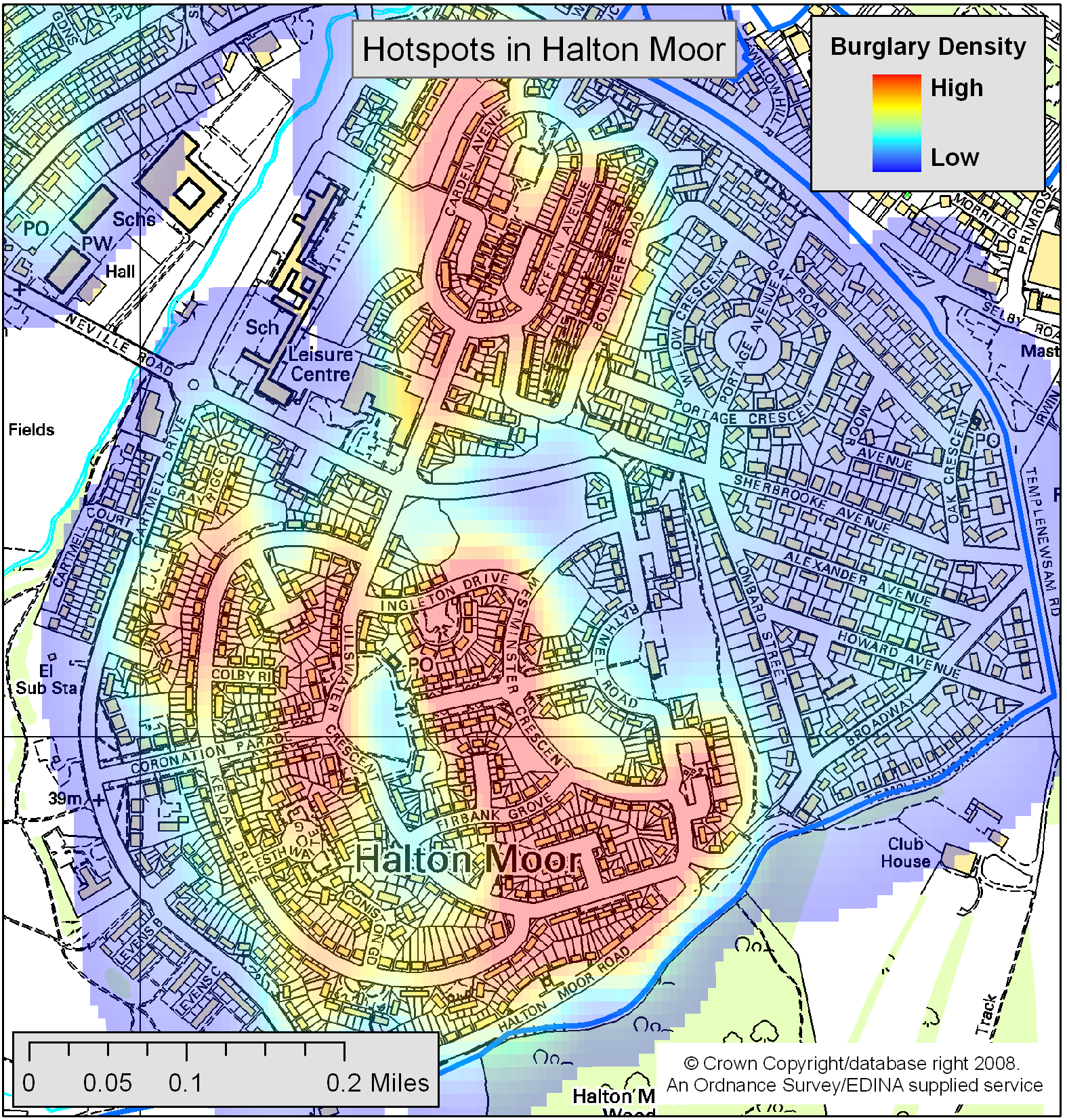

Did it work?

Halton Moor

Did it work?

Journey to Crime

ABM Predictive Example

Scenario Results

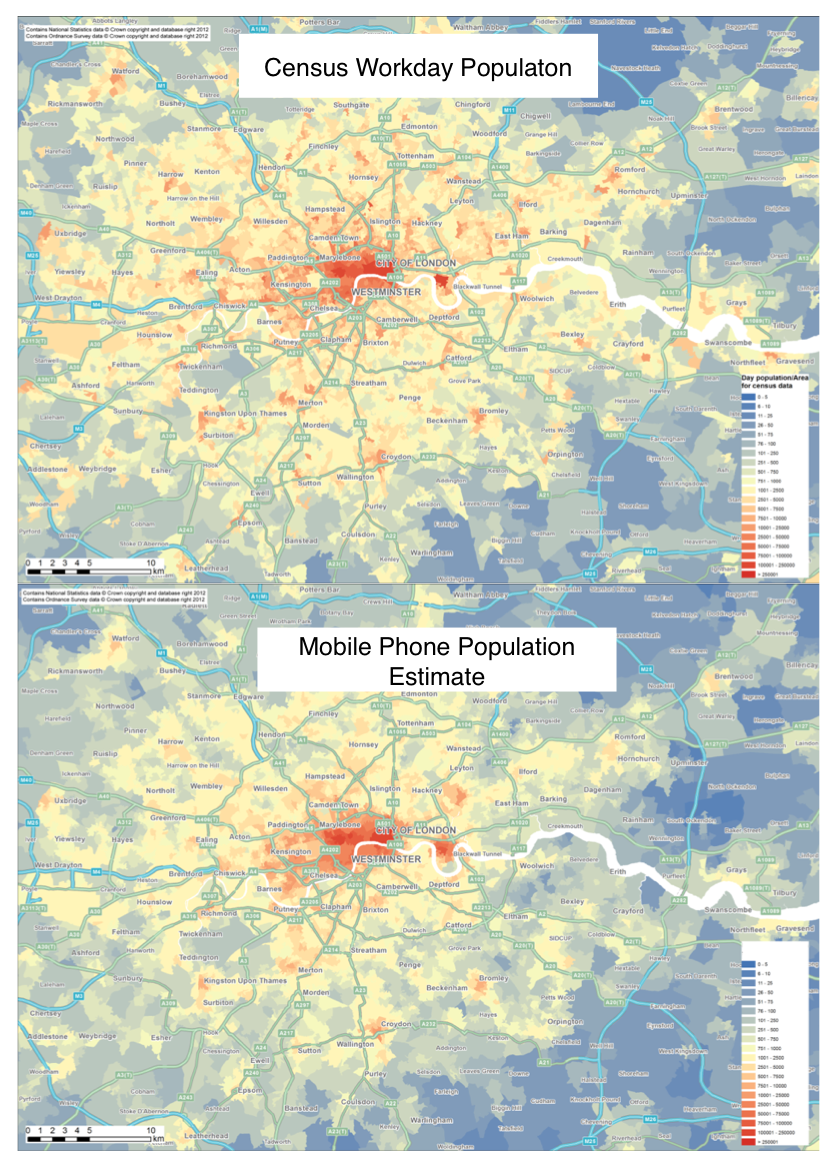

Part 2: Modelling Urban Dynamics

How many people are there in Trafalgar Square right now?

We need to better understand urban flows:

Crime – how many possible victims?

Pollution – who is being exposed? Where are the hotspots?

Economy – can we attract more people to our city centre?

Health – can we encourage more active travel?

Smart cities and the data deluge

Abundance of data about individuals and their environment

"Big data revolution" (Mayer-Schönberger and Cukier, 2013)

"Data deluge" (Kitchin, 2013a)

Smart cities

Cities that "are increasingly composed of and monitored by pervasive and ubiquitous computing" (Kitchin, 2013a)

Large and growing literature

(A few) Challenges with ABM

Parameterising

Creating rule sets

Representing behaviour accurately

Calibrating and validating

Getting the right amount of detail

Reproducibility

Uncertainty
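Calibration, one of the challenges listed above, can be illustrated with the simplest possible approach: a grid search that picks the parameter value whose simulated output best matches an observation. The model, the observed total of 120 and the parameter grid are all hypothetical; real ABM calibration usually involves many parameters and more sophisticated search methods.

```python
import random
import statistics


def offence_model(p_offend, seed, n_agents=50, steps=100):
    # Hypothetical stochastic model: total offences over a simulated period
    rng = random.Random(seed)
    return sum(rng.random() < p_offend for _ in range(n_agents * steps))


def calibrate(observed, candidates, runs=10):
    """Grid-search calibration: choose the parameter whose mean output over
    several seeds is closest to the observed value."""
    best, best_error = None, float("inf")
    for p in candidates:
        mean_output = statistics.mean(offence_model(p, seed) for seed in range(runs))
        error = abs(mean_output - observed)
        if error < best_error:
            best, best_error = p, error
    return best, best_error


observed_total = 120                      # hypothetical observation, not real data
grid = [i / 1000 for i in range(5, 46)]   # p_offend from 0.005 to 0.045
best_p, best_error = calibrate(observed_total, grid)
print(best_p, best_error)
```

Every candidate is evaluated with the same seeds (common random numbers), so differences between candidates reflect the parameter rather than sampling noise. A calibrated model still needs validating against data that were not used for calibration.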

Agent-Based Urban Modelling

New project: Data Assimilation for Agent-Based Models (dust)

5-year research project (€1.5M)

Funded by the European Research Council (Starting Grant)

Started in January

Main aim: create new methods for dynamically assimilating data into agent-based models.
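One widely used data assimilation technique is the particle filter. The sketch below is a generic bootstrap particle filter, offered as an illustration of the idea rather than as the project's method: each particle is a complete copy of a hypothetical agent model (agents walking along a corridor at known, assumed speeds with random noise), and noisy observations of the agents' positions are used to reweight and resample the particles as the simulation runs. Observation and process noise levels are assumptions.

```python
import math
import random


def step(positions, speeds, rng, noise=0.5):
    # Hypothetical agent model: each agent walks forward at its own speed, plus noise
    return [x + v + rng.gauss(0, noise) for x, v in zip(positions, speeds)]


def likelihood(positions, observation, obs_sd=1.0):
    # Gaussian likelihood of the noisy observed positions given a particle's state
    sq = sum((x - z) ** 2 for x, z in zip(positions, observation))
    return math.exp(-sq / (2 * obs_sd ** 2))


def particle_filter(observations, speeds, n_particles=200, seed=0):
    """Bootstrap particle filter: each particle is a full copy of the agents' state."""
    rng = random.Random(seed)
    n_agents = len(speeds)
    particles = [[0.0] * n_agents for _ in range(n_particles)]
    estimates = []
    for z in observations:
        # 1. Predict: advance every particle with the agent-based model
        particles = [step(p, speeds, rng) for p in particles]
        # 2. Update: weight particles by how well they match the new observation
        weights = [likelihood(p, z) for p in particles]
        if sum(weights) == 0:
            weights = [1.0] * n_particles  # all weights underflowed: fall back to uniform
        # 3. Resample: keep particles in proportion to their weights
        particles = rng.choices(particles, weights=weights, k=n_particles)
        # Estimate: mean position of each agent across the particles
        estimates.append([sum(p[i] for p in particles) / n_particles for i in range(n_agents)])
    return estimates


# Synthetic "real world" to assimilate (hypothetical, for illustration only)
truth_rng = random.Random(1)
speeds = [1.0, 1.5, 0.8]
truth = [0.0, 0.0, 0.0]
observations, true_states = [], []
for _ in range(30):
    truth = step(truth, speeds, truth_rng)
    true_states.append(truth)
    observations.append([x + truth_rng.gauss(0, 1.0) for x in truth])

estimates = particle_filter(observations, speeds)
error = sum(abs(e - t) for e, t in zip(estimates[-1], true_states[-1])) / len(speeds)
print(f"mean absolute error at final step: {error:.2f}")
```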

Questions & Discussion

Thoughts / comments

How would you model these behaviours?